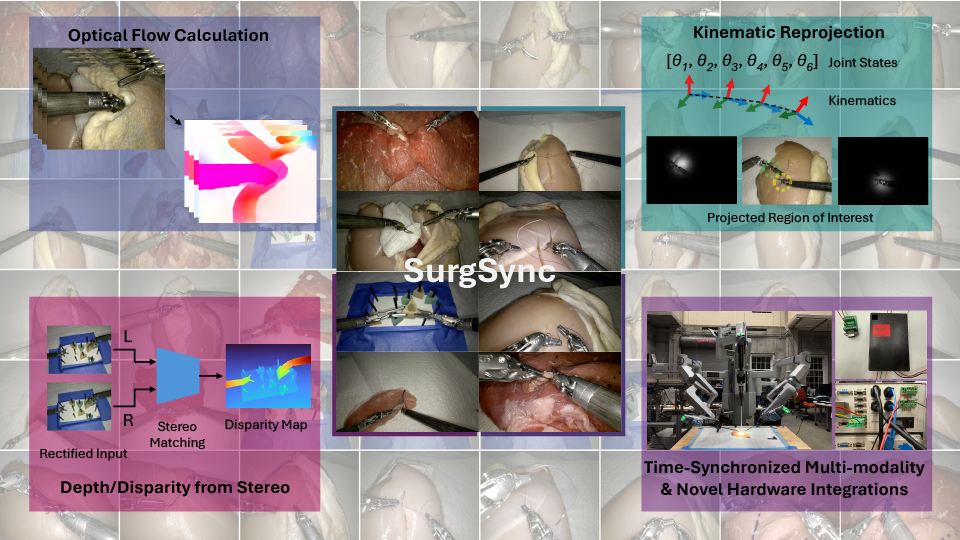

SurgSync: Time-Synchronized Multi-modal Data Collection Framework and Dataset for Surgical Robotics

Currently under review, submitted in 2025

Overview

This research presents a multi-modal, time-synchronized data collection and post-processing framework for surgical robotics, along with datasets and AI-driven applications (stay tuned!).

Key Contributions

- Lead and manage an 8-person cross-functional team, delivering IRB-compliant data collection and analysis that accelerated the publication timeline and demo readiness

- Build a time-synchronized multi-modal data collection pipeline (vision + kinematics + sensor, with cross-modality synchronization latency within 10 ms) and a large ex-vivo dataset (100+ instances)

- Enable a novel kinematic projection approach and downstream optical flow/depth estimation using deep learning

- Design and implement a custom capacitive contact sensor to acquire ground truth for tool-tissue contact

- Seamlessly integrate a modern chip-on-tip endoscope with the da Vinci Research Kit (dVRK), enabling high-quality image data acquisition

- Design a novel data annotation application with a PyQt-based graphical user interface (GUI) for manual labeling

- The data collection pipeline has been used in the Open-H-Embodiment effort
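
To illustrate what a 10 ms synchronization bound means in practice, here is a minimal sketch of nearest-timestamp matching between two streams. It is not the SurgSync implementation: the function name `match_nearest`, the sample timestamps, and the assumption that both streams carry sorted timestamps in seconds on a shared clock are all hypothetical.

```python
from bisect import bisect_left


def match_nearest(ref_ts, other_ts, tol=0.010):
    """For each reference timestamp, return the index of the nearest
    sample in other_ts, or None if the gap exceeds tol (seconds).

    Hypothetical helper: assumes other_ts is sorted ascending and both
    lists use the same clock.
    """
    matches = []
    for t in ref_ts:
        i = bisect_left(other_ts, t)
        # Only the neighbors on either side of the insertion point can be nearest.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(other_ts)]
        if not candidates:
            matches.append(None)
            continue
        best = min(candidates, key=lambda j: abs(other_ts[j] - t))
        matches.append(best if abs(other_ts[best] - t) <= tol else None)
    return matches


# Made-up example: camera frames at ~30 Hz, kinematics samples at 50 Hz.
frames = [0.000, 0.033, 0.066, 0.100]
kinematics = [0.001, 0.021, 0.041, 0.061, 0.081]
print(match_nearest(frames, kinematics))  # [0, 2, 3, None]
```

The last frame has no kinematics sample within 10 ms, so it is left unmatched rather than paired with a stale reading.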

Future/Ongoing Work

- Investigate surgical robot tool-tissue contact detection using a multi-modal deep learning approach

© 2026 Jack (Haoying) Zhou.